- Home /

- Academy /

- Technical SEO /

- Mastering Crawling and Indexing for Better SEO

Mastering Crawling and Indexing for Better SEO - An SEO guide for robot tags

Many SEOs are familiar with the concepts of optimising crawl budget and blocking bots from indexing pages. However, the devil is in the details. Especially since best practises have shifted dramatically in recent years.

A simple change to your robots.txt file or robots tags can have a significant impact on your website. Today, we'll look at how to make sure your site's impact is always positive.

Take control of your website's crawling and indexing by communicating your preferences to search engines.

This helps them understand which parts of your website to pay attention to and which to ignore. There are numerous methods for accomplishing this; however, which method should be used when? In this article, we'll go over when to use each method and highlight the benefits and drawbacks.

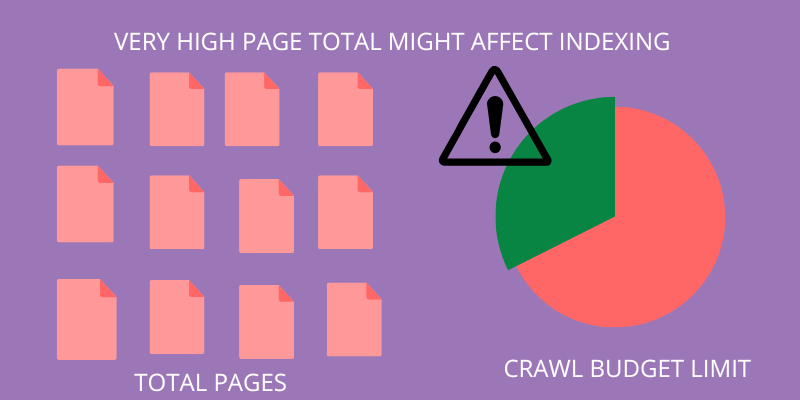

Crawl Budget Optimization

A search engine spider has a "allowance" for how many pages on your site it can and wants to crawl. This is referred to as a "crawl budget."

The crawl budget for your site can be found in the Google Search Console (GSC) "Crawl Stats" report. It should be noted that the GSC is a collection of 12 bots that are not all dedicated to SEO. It also collects AdWords or AdSense bots, both of which are SEA bots. As a result, this tool provides an estimate of your global crawl budget but not its exact distribution.

To make the number more actionable, divide the average number of pages crawled per day by the total number of crawlable pages on your site - you can get the number from your developer or run an unlimited site crawler. This will give you an expected crawl ratio against which to start optimising. Do you want to go deeper? Analyze your site's server log files to get a more detailed breakdown of Googlebot's activity, such as which pages are being visited, as well as statistics for other crawlers.

There are numerous ways to optimise crawl budget, but the GSC "Coverage" report is a good place to start to understand Google's current crawling and indexing behaviour.

Difference between crawling and indexing

| S.No. | Crawling | Indexing |

|---|---|---|

| 1. | Crawling is an SEO term that means "following links”. | The process of "adding webpages to Google search" is known as indexing. |

| 2. | Crawling is the process by which indexing is accomplished. Google crawls the web pages and indexes them. | search engine crawlers visit a link, and indexing occurs when crawlers save or index that link in a search engine database. |

| 3. | When Google visits your website for the purpose of tracking. Google's Spiders or Crawlers perform this function. | After crawling, the result is added to Google's index (i.e. web search), implying that crawling and indexing are a sequential process. |

| 4. | Crawling is the process by which search engine bots discover publicly available web pages. | Indexing is the process by which search engine bots crawl web pages and save a copy of all information on index servers, and search engines display relevant results when a user performs a search query. |

| 5. | It locates web pages and indexing queues. | It examines the content of web pages and saves pages with high quality content in the index. |

| 6. | Crawling occurs when search engine bots actively crawl your website. | The process of inserting a page is known as indexing. |

| 7. | Crawling is the process of discovering the web crawler's URLs through recursive visits to the input of web pages. | Every significant word on a web page found in the title, heading, meta tags, alt tags, subtitles, and other important positions. |

What exactly is a Robots.txt file?

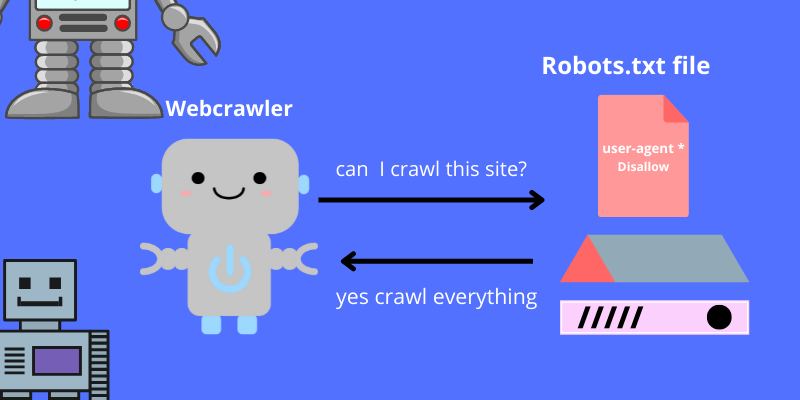

A search engine will check the robots.txt file before spidering any page. This file instructs bots on which URL paths they are permitted to visit. These entries, however, are only directives, not mandates.

Robots.txt, unlike a firewall or password protection, cannot reliably prevent crawling. It's the electronic equivalent of a "please do not enter" sign on an unlocked door.

Crawlers that are polite, such as major search engines, will generally follow instructions. Email scrapers, spambots, malware, and spiders that scan for site vulnerabilities are examples of hostile crawlers. Furthermore, it is a publicly accessible file. Your instructions are visible to anyone. You should not use your robots.txt file to:

conceal sensitive information Make use of password protection. To restrict access to your staging and/or development locations. Make use of server-side authentication.

To expressly prohibit hostile crawlers. Use IP or user-agent filtering (aka preclude a specific crawler access with a rule in your .htaccess file or a tool such as Cloudflare). A valid robots.txt file with at least one directive grouping should be present on every website. Without one, all bots have full access by default, so every page is considered crawlable. Even if this is your intention, a robots.txt file should make it clear to all stakeholders. Furthermore, if you don't have one, your server logs will be littered with failed requests for robots.txt.

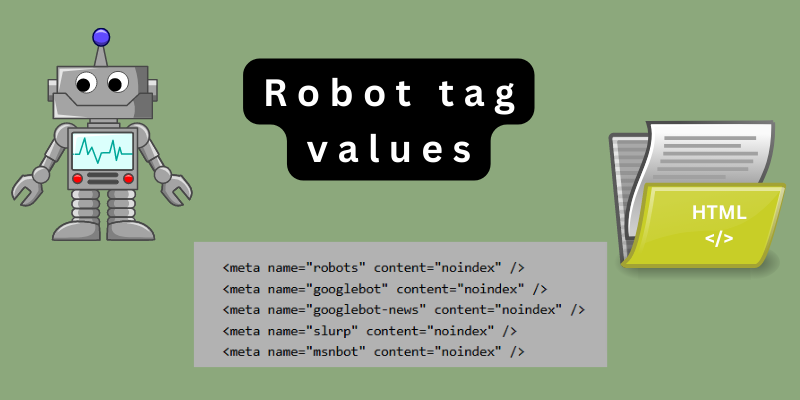

What exactly are Meta Robots Tags?

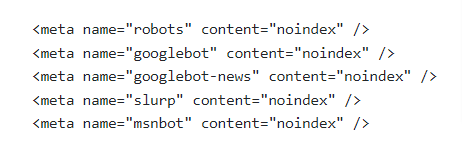

Meta name="robots" tells crawlers whether and how to "index" the content and whether to "follow" (i.e., crawl) all on-page links, passing along link equity.

The directive applies to all crawlers by using the general meta name="robots." A user agent can also be specified. For instance, meta name="googlebot" However, it is uncommon to require the use of multiple meta robots tags to set instructions for specific spiders.

When using meta robots tags, there are two important things to keep in mind: The meta tags, like robots.txt, are directives, not mandates, and may be ignored by some bots. Only links on that page are subject to the robots nofollow directive. Without a nofollow, a crawler may follow the link from another page or website. As a result, the bot may still visit and index your unwanted page.

The following is a list of all meta robots tag directives:

- index: Informs search engines that this page should appear in search results. If no directive is specified, this is the default state.

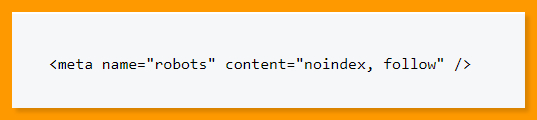

- index: Informs search engines that this page should not appear in search results.

- follow: Instructs search engines to follow all links on this page and pass equity even if the page isn't indexed. If no directive is specified, this is the default state.

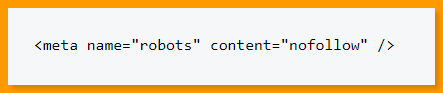

- nofollow: Informs search engines that they should not follow any links on this page or pass equity.

- all: This is equivalent to "index, follow."

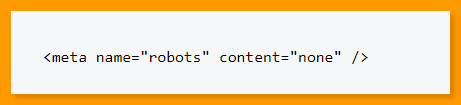

- none: The same as "noindex, nofollow."

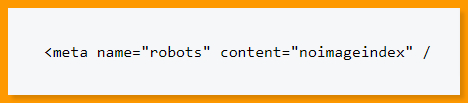

- noimageindex: Informs search engines that no images on this page should be indexed.

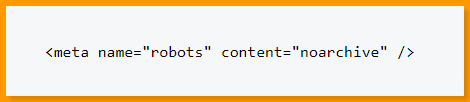

- noarchive: Informs search engines that a cached link to this page should not be displayed in search results.

- no-cache: Similar to archive, but only supported by Internet Explorer and Firefox.

- nosnippet: Informs search engines that a meta description or video preview for this page should not be displayed in search results.

- translate: Informs search engines that this page should not be translated and should not appear in search results.

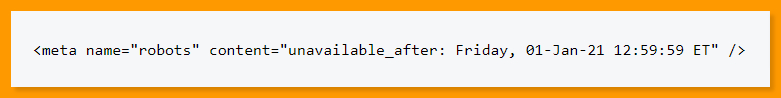

- unavailable after: Inform search engines that this page will no longer be indexed after a certain date.

- noodp: Now deprecated, it is used to prevent search engines from using the DMOZ page description in search results.

- noydir: This now-deprecated command used to prevent Yahoo from using the page description from the Yahoo directory in search results.

- noyaca: Prevents Yandex from using the Yandex directory page description in search results.

According to Yoast, not all search engines support all robots meta tags, nor are they clear about what they do and don't.

What are X-Robots-Tags Robots Directives & SEO Robots

The server sends X-Robots-Tag as an element of the HTTP response header for a given URL via the.htaccess and httpd.conf files.

As an X-Robots-Tag, any robots meta tag directive can be specified. An X-Robots-Tag, on the other hand, adds some extra flexibility and functionality. If you want, you can use X-Robots-Tag instead of meta robots tags:

- Control the behaviour of robots for non-HTML files rather than just HTML files.

- Control the indexing of a specific element of a page rather than the entire page.

- Add rules to determine whether a page should be indexed. Index an author's profile page, for example, if they have more than 5 published articles.

- Index and follow directives should be applied site-wide rather than page-specific.

- So you now understand the distinctions between the three robot directives.

Robots.txt is intended to save crawl budget but will not prevent a page from appearing in search results. It serves as your website's first gatekeeper, directing bots not to access before the page is requested.

Both types of robot tags are concerned with controlling indexing and the transmission of link equity. Robots meta tags become active only after the page has loaded. X-Robots-Tag headers, on the other hand, provide more granular control and take effect after the server responds to a page request.

With this understanding, SEOs can improve how we use robots directives to solve crawling and indexation issues.

Best Practices Checklist

It's all too common for a website to be accidentally removed from Google due to robots controlling error. Nonetheless, when used correctly, robots handling can be a powerful addition to your SEO arsenal. Just make sure to proceed with caution and wisdom.

Here's a quick checklist to get you started:

- Password protection protects private information.

- Server-side authentication is used to restrict access to development sites.

- With user-agent blocking, you can limit crawlers that consume bandwidth but provide little value in return.

- Ensure that the primary domain and any subdomains have a text file on the top level directory called "robots.txt" that returns a 200 code.

- Ascertain that the robots.txt file contains at least one block containing a user-agent line and a disallow line.

- Ascertain that the robots.txt file contains at least one sitemap line, which should be entered as the last line.

- Use the GSC robots.txt tester to validate the robots.txt file.

- Make certain that each indexable page specifies its robots tag directives.

- Check for any contradictory or redundant directives in robots.txt, robots meta tags, X-Robots-Tags, the.htaccess file, and GSC parameter handling.

- In the GSC coverage report, correct any "Submitted URL marked 'noindex'" or "Submitted URL blocked by robots.txt" errors.

- Determine the reason for any robot-related exclusions from the GSC coverage report.

- Make certain that only relevant pages are displayed in the GSC "Blocked Resources" report.

- Check your robot's handling to ensure you're doing it correctly.

Conclusion

When a website grows larger than a small homepage, one of the most important tasks is to ensure that the existing content in the Google index is as complete and up-to-date as possible. Because the resources available for capturing and storing web pages are limited, Google sets separate limits for each domain: How many URLs are crawled per day, and how many of these pages are indexed!

These limits are quickly reached by large websites. As a result, it is critical to make the best use of the available resources through smart crawl and indexing management.

Start using PagesMeter now!

With PagesMeter, you have everything you need for better website speed monitoring, all in one place.

- Free Sign Up

- No credit card required

The hreflang attribute is used to specify which language your content is in and which geographical region it is intended for.

The URL redirect also known as URL forwarding is a technique to give more than one URL address to a page or as a whole website or an application.

The rel attribute describes the connection between the current document and a linked resource.

Uncover your website’s SEO potential.

PagesMeter is a single tool that offers everything you need to monitor your website's speed.